ido . The structure of the programs and their command line options are described in Applications. At some points in the process we make use of annotations. The annotations typically originate from another data set called annoset. Naturally, demoset and annoset may designate the same data set.

For clarity, we assume that each data set has its own subdirectory in a VisualSearch directory. So, we start with two directories: VisualSearch/demoset and VisualSearch/annoset.
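As a minimal sketch of this starting layout, the directories can be created as follows. The `VISUALSEARCH_PARENT` variable is hypothetical (it is not an Impala setting); it defaults to a temporary directory so the snippet can be tried without touching real data.

```shell
#!/bin/sh
# Create the two data set directories assumed in this walkthrough.
# VISUALSEARCH_PARENT is a hypothetical variable used for illustration only.
parent="${VISUALSEARCH_PARENT:-$(mktemp -d)}"
mkdir -p "$parent/VisualSearch/demoset" "$parent/VisualSearch/annoset"

# Show the resulting data set directories.
ls "$parent/VisualSearch"
```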

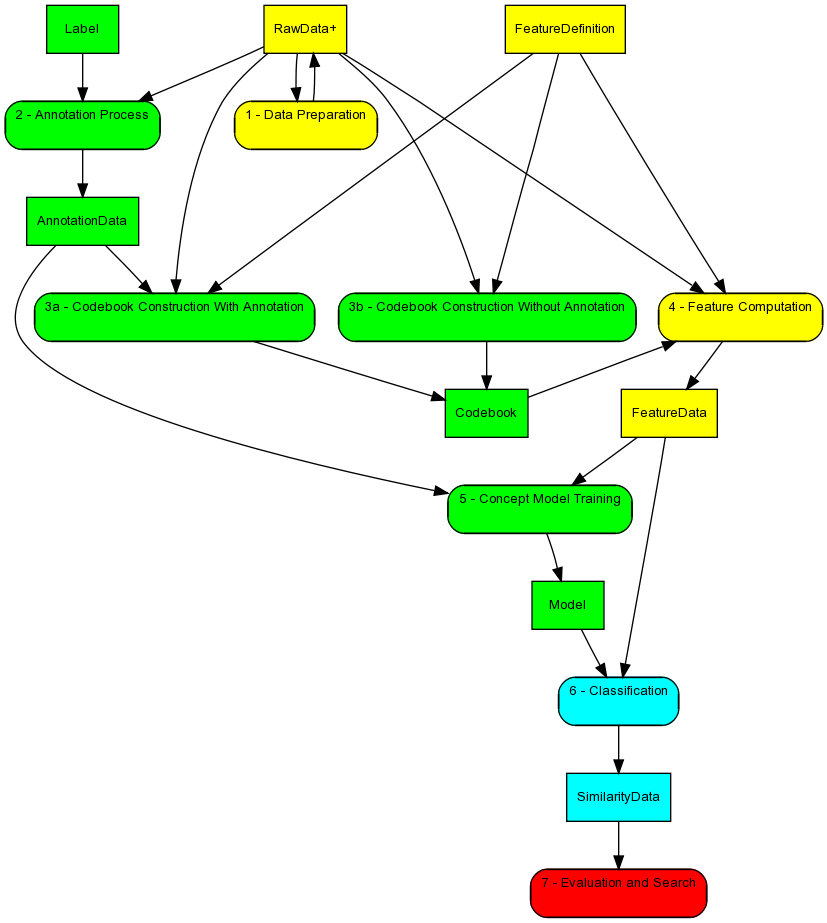

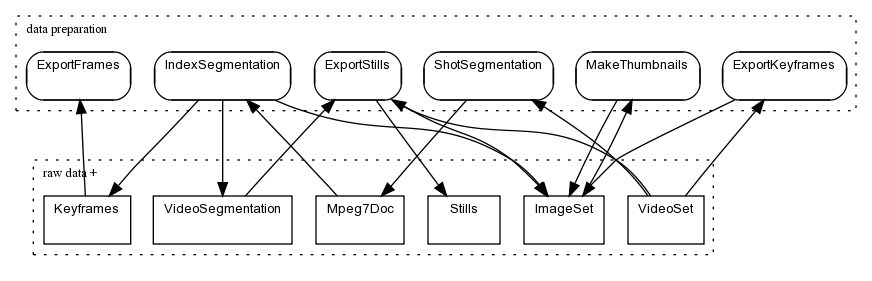

Global Activity Diagram

IDo Pipeline

IDo commands:

Sample usage:

src/script/ido/test/prep.ido:

    set -o errexit
    stageVideos test.txt false
    export SET=test.txt
    export NCPU=3
    export EXTRA2=yes
    export FRAMES_JPG=yes
    cleanPrepTest
    #export SRCOPT=lavcnoidx
    shotsegmenter
    keyframes
    checkPrepTest

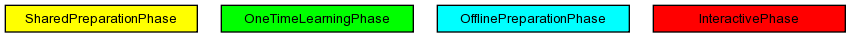

Activity diagram:

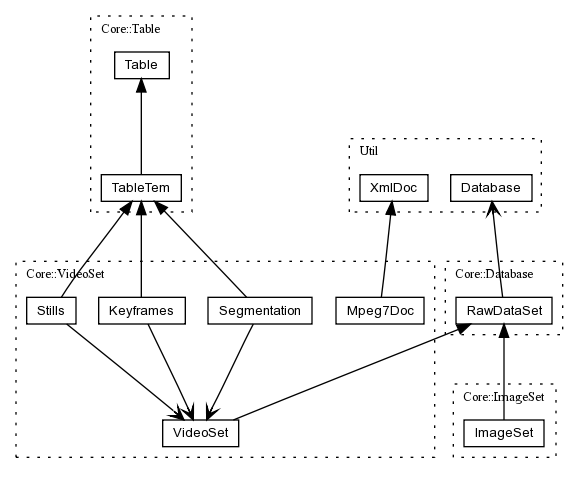

Class diagram:

    # generate video set definition with .mpg files on linux
    cd demoset/VideoData
    /usr/bin/find . -iname "*.mpg" -print > demoset.txt

Within Impala, the text file is used via the Impala::Core::VideoSet::VideoSet class.
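The following sketch shows what such a video set definition looks like, using a throwaway directory with dummy files. The file names are made up for illustration, and the `sort` step is our addition to make the listing order reproducible; it is not part of the original command.

```shell
#!/bin/sh
# Build a video set definition in a throwaway directory.
# The file names below are dummies created only for this illustration.
work="$(mktemp -d)"
mkdir -p "$work/demoset/VideoData/news"
: > "$work/demoset/VideoData/a.mpg"
: > "$work/demoset/VideoData/news/b.MPG"
: > "$work/demoset/VideoData/readme.txt"

# -iname matches case-insensitively, so both .mpg and .MPG are listed,
# while readme.txt is skipped.
(cd "$work/demoset/VideoData" && find . -iname "*.mpg" -print | sort > demoset.txt)
cat "$work/demoset/VideoData/demoset.txt"
```

Each line of the resulting text file is the relative path of one video.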

The --src lavcwriteidx option generates an index file for each video to facilitate efficient and reliable random access to frame data in the future.

vidset shotsegmenter demoset.txt --report 100 --src lavcwriteidx

An Impala::Core::VideoSet::ShotSegmenter object produces an Impala::Core::VideoSet::Mpeg7Doc and an Impala::Core::Table::SimilarityTableSet per video. The Mpeg7 documents are stored in MetaData/shots/. The shot boundary similarity scores are stored in SimilarityData/Frames/streamConcepts.txt/no_model/direct/.

vidset indexsegmentation demoset.txt --virtualWalk

An Impala::Core::VideoSet::IndexSegmentation object produces an Impala::Core::VideoSet::Segmentation (stored in VideoIndex/segmentation.tab), an Impala::Core::VideoSet::Keyframes (stored in VideoIndex/keyframes.tab) and Impala::Core::ImageSet::ImageSet 's for keyframes and thumbnails (stored in ImageData/keyframes.txt and ImageData/thumbnails.txt). The shot segmentation may be inspected using repository dumpsegmentation file: demoset.txt and the keyframe definition using repository dumpkeyframes file: demoset.txt .

split archive.

    # separate file for each keyframe
    #vidset exportkeyframes demoset.txt keyframes.txt --keyframes --report 100 --src lavc
    # keyframes in one archive per video (split archive)
    vidset exportkeyframes demoset.txt keyframes.txt split 90 --keyframes --report 100 --src lavc

An Impala::Core::VideoSet::ExportKeyframes object does jpg compression on the frames and puts the data in a std::vector of Impala::Core::Array::Array2dScalarUInt8's. After each video Impala::Core::Array::WriteRawListVar writes the data to ImageArchive/keyframes/*/images.raw. The result may be inspected visually using show images.raw .

0.5

    # separate file for each thumbnail
    #imset thumbnails keyframes.txt thumbnails.txt 0.5 --imSplitArchive --report 100
    # thumbnails in one archive per video (split archive)
    #imset thumbnails keyframes.txt thumbnails.txt 0.5 split --imSplitArchive --report 100
    # thumbnails in one archive for the whole video set
    imset thumbnails keyframes.txt thumbnails.txt 0.5 archive --imSplitArchive --report 100

An Impala::Core::ImageSet::Thumbnails object scales the frames and puts the data in a std::vector of Impala::Core::Array::Array2dScalarUInt8's. At the end, Impala::Core::Array::WriteRawListVar writes the data to ImageArchive/thumbnails.raw.

vidset exportstills demoset.txt def --stepSize 10000000 --report 1 --src lavc

An Impala::Core::VideoSet::ExportStills object produces an Impala::Core::VideoSet::Stills (stored in VideoIndex/stills) and an Impala::Core::ImageSet::ImageSet (stored in ImageData/stills.txt). The still definition may be inspected using repository dumpstills file: demoset.txt .

--stepSize 10000000 implies that vidset actually skips all frames after providing the first one. The ExportStills object walks over the stills by itself because vidset has no knowledge of the stills.
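The effect of a very large step size can be illustrated with a small model of frame stepping. This is a sketch of the idea only and not vidset's actual code; the function name and the frame counts are assumptions made for the example.

```shell
#!/bin/sh
# Print the frame numbers a walker would visit when it advances by
# step_size frames at a time over a video of frame_count frames.
# This models the stepping idea only; it is not vidset's actual code.
visited_frames() {
    frame_count=$1
    step_size=$2
    frame=0
    while [ "$frame" -lt "$frame_count" ]; do
        echo "$frame"
        frame=$((frame + step_size))
    done
}

visited_frames 5000 1000       # visits 0, 1000, 2000, 3000, 4000
visited_frames 5000 10000000   # visits frame 0 only
```

With a step size far beyond the length of any video, only the first frame is ever delivered, which is why the ExportStills object has to walk over the stills itself.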

vidset exportstills demoset.txt data 90 --stepSize 10000000 --report 1 --src lavc

An Impala::Core::VideoSet::ExportStills object does jpg compression on the stills and puts the data in a std::vector of Impala::Core::Array::Array2dScalarUInt8's. After each video Impala::Core::Array::WriteRawListVar writes the data to ImageArchive/stills/*/images.raw.

vidset exportframes demoset.txt split --keyframes --report 100 --src lavc

An Impala::Core::VideoSet::ExportFrames object does png compression on the frames and puts the data in a std::vector of Impala::Core::Array::Array2dScalarUInt8's. After each video Impala::Core::Array::WriteRawListVar writes the data to Frames/*/images.raw. The result may be inspected visually using show images.raw --png .

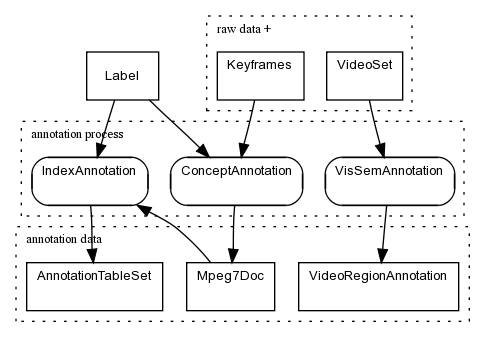

Activity diagram:

Class diagram:

todo

The vidbrowse application supports the video region annotation process.

vidbrowse anno annotation.vxs --videoSet trec2005fsd.txt

Jan has assembled an annotation set from the videos in the TRECVID 2005 development set. This annotation set (an Impala::Core::Table::TableVxs) is stored in VideoSearch/trec2005devel/Annotations/annotation.vxs.

The annovidset application supports the frame annotation process.

annovidset

The set of Labels is defined by a plain text file and is stored in Annotations/concepts.txt. The annotations are stored as an Impala::Core::VideoSet::Mpeg7Doc per Label and per video in MetaData/annotations/concepts.txt/ .

The --segmentation option maps the annotations onto shots, i.e. a shot is positive if it overlaps with some positive annotation. The --keyframes option maps the annotations onto keyframes.
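The overlap rule for shots can be sketched as an interval test. We assume here that shots and annotations are inclusive frame ranges; the function names and the "first:last" notation are illustrative and are not taken from Impala.

```shell
#!/bin/sh
# Succeed (exit status 0) when two inclusive frame ranges overlap.
ranges_overlap() {
    # $1,$2: first and last frame of the shot
    # $3,$4: first and last frame of the annotation
    [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# A shot is positive if it overlaps at least one positive annotation.
# Annotations are passed as "first:last" pairs (an illustrative format).
shot_is_positive() {
    shot_first=$1; shot_last=$2; shift 2
    for anno in "$@"; do
        if ranges_overlap "$shot_first" "$shot_last" "${anno%%:*}" "${anno##*:}"; then
            return 0
        fi
    done
    return 1
}

shot_is_positive 100 199 "50:120" && echo "shot 100-199: positive"
shot_is_positive 200 299 "50:120" "400:450" || echo "shot 200-299: negative"
```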

vidset indexannotation annoset.txt concepts.txt --segmentation --keyframes --virtualWalk

An Impala::Core::VideoSet::IndexAnnotation object produces two Impala::Core::Table::AnnotationTableSet s. The keyframe table is written to Annotations/Frame/concepts.txt/*.tab and the shot table is written to Annotations/Shot/concepts.txt/*.tab. An individual Impala::Core::Table::AnnotationTable may be inspected using repository dumpannotationtable file: annoset.txt concepts.txt concept . An overview of the annotations may be generated using repository dumpannotationtableset file: annoset.txt concepts.txt Frame 0 .

IDo commands:

Sample usage:

src/script/ido/voc2007mini/makeforestAllSurf.ido:

    set -o errexit
    stageVocMini
    export SET=voc2007mini.txt
    cleanCluster
    export CONCEPTS=conceptsVOC2007.txt
    makeforestSurf
    makeforestOpponentSurf
    makeforestRgbSurf
    checkMakeforestAllSurf

src/script/ido/trec2005fsd_try/sm2.ido:

    set -o errexit
    stage
    syncfile trec2005fsd_try.txt Annotations/sm2.vxs
    export SET=trec2005fsd_try.txt
    export PROTOSET=trec2005fsd_try.txt
    #export NCPU=2
    cleanSm2
    system vidset vistrain Annotations/sm2.vxs --videoSet ${SET} --ini ${IMPALAROOT}/src/script/vissem_sm2.ini --report 1 --src lavc
    system cp ../trec2005fsd_try/Codebooks/sm2.vxs/vissem/vissem_all.txt ../trec2005fsd_try/Codebooks/sm2.vxs/vissem/vissem_proto_sm2_nrScales_2_nrRects_130.txt
    system vidset viseval ${SET} ${PROTOSET} vissem_proto_sm2_nrScales_2_nrRects_130.txt 0 --keyframes --keyframeSrc --ini ${IMPALAROOT}/src/script/vissem_sm2.ini --report 1 --numberFiles 2 --numberKeyframes 5 --toStore
    system vidset concatfeatures ${SET} vissem_proto_sm2_nrScales_2_nrRects_130.txt --keyframes --virtualWalk --numberFiles 2 --numberKeyframes 5 --toStore
    #system vidset indexfeatures ${SET} vissem_proto_sm2_nrScales_2_nrRects_130 --keyframes --virtualWalk --numberFiles 2 --numberKeyframes 5 --toStore
    checkSm2VissemKeyfr
    #exit
    ##vidset visgaborstat ${SET} --keyframes --keyframeSrc --ini ${IMPALAROOT}/src/script/vissem_sm2.ini --report 1 --numberFiles 2 --numberKeyframes 5 > stat.txt
    system vidset visgabortrain Annotations/sm2.vxs --videoSet ${SET} --ini ${IMPALAROOT}/src/script/vissem_sm2.ini --report 1 --src lavc
    system cp ../trec2005fsd_try/Codebooks/sm2.vxs/vissemgabor/vissemgabor_all.txt ../trec2005fsd_try/Codebooks/sm2.vxs/vissemgabor/vissemgabor_proto_sm2_nrScales_2_nrRects_130.txt
    system vidset visgaboreval ${SET} ${PROTOSET} vissemgabor_proto_sm2_nrScales_2_nrRects_130.txt 0 --keyframes --keyframeSrc --rkfMask --ini ${IMPALAROOT}/src/script/vissem_sm2.ini --report 1 --numberFiles 2 --numberKeyframes 5 --toStore
    system vidset concatfeatures ${SET} vissemgabor_proto_sm2_nrScales_2_nrRects_130.txt --keyframes --rkfMask --virtualWalk --numberFiles 2 --numberKeyframes 5 --toStore
    #system vidset indexfeatures ${SET} vissemgabor_proto_sm2_nrScales_2_nrRects_130 --keyframes --virtualWalk --numberFiles 2 --numberKeyframes 5 --toStore
    checkSm2VissemGaborKeyfr

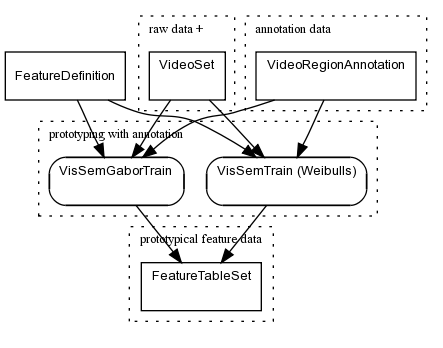

Activity diagram:

Class diagram:

todo

vidset vistrain Annotations/annotation.vxs --videoSet annoset.txt --ini ../script/vissem.ini --report 100

vissem.ini:

    # feature parameters
    nrScales 2
    spatialSigma_s0 1.0
    spatialSigma_s1 3.0
    histBinCount 1001
    # region definition
    borderWidth 15
    nrRegionsPerDimMin 2
    nrRegionsPerDimMax 6
    nrRegionsStepSize 4
    # annotation set (for reading only)
    data .;../annoset
    protoDataFile annotation.vxs
    protoDataType vid
    protoDataSet annoset.txt

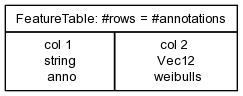

mainVidSet loads the annotations as bookmarks and passes each one to an Impala::Core::VideoSet::VisSemTrain object for processing. The result is an Impala::Core::Feature::FeatureTableSet with two Impala::Core::Feature::FeatureTable s (one for each scale: sigma=1.0 and sigma=3.0). Each table contains two Weibull parameters for each of six features (Wx, Wy, Wlx, Wly, Wllx, and Wlly) for all annotations.

The tables are stored in Codebooks/annotation.vxs/vissem/ .

vidset visgabortrain Annotations/annotation.vxs --videoSet annoset.txt --ini ../script/vissem.ini --report 100

An Impala::Core::VideoSet::VisSemGaborTrain object produces an Impala::Core::Feature::FeatureTableSet that is stored in Codebooks/annotation.vxs/vissemgabor/ .

IDo commands:

Sample usage:

src/script/ido/imsearchtest/clusterSift.ido:

    set -o errexit
    export SET=imsearchtest.txt
    export NCPU=1
    cleanCluster
    export LIMIT=100
    export CLUSTERS=25
    export RANDOMIZE="--noRandomizeSeed"
    clusterSift
    checkClusterSift
    #echo "Note: this test only works in sequential mode since random numbers are used"

src/script/ido/imsearchtest/clusterSurf.ido:

    set -o errexit
    export SET=imsearchtest.txt
    export NCPU=1
    cleanCluster
    export LIMIT=100
    export CLUSTERS=25
    export RANDOMIZE="--noRandomizeSeed"
    clusterSurf
    checkClusterSurf
    #echo "Note: this test only works in sequential mode since random numbers are used"

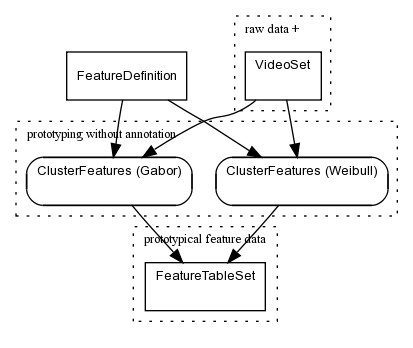

Activity diagram:

Class diagram:

todo

    vidset clusterfeatures demoset.txt weibull "radius;weisim;1.0;1.5;0.5" start 500 5000 --keyframes --keyframeSrc --ini ../script/vissemClusterSP.ini --report 25
    vidset clusterfeatures demoset.txt weibull "radius;weisim;2.0;2.5;0.5" start 500 5000 --keyframes --keyframeSrc --ini ../script/vissemClusterSP.ini --report 25
    vidset clusterfeatures demoset.txt weibull "radius;weisim;3.0;3.5;0.5" start 500 5000 --keyframes --keyframeSrc --ini ../script/vissemClusterSP.ini --report 25

vissemClusterSP.ini:

    doTemporalSmoothing 0
    doTemporalDerivative 0
    temporalSigma 0.75
    # feature parameters
    nrScales 2
    spatialSigma_s0 1.0
    spatialSigma_s1 3.0
    doC 0
    doRot 0
    histBinCount 1001
    # region definition
    borderWidth 15
    nrRegionsPerDimMin 2
    #nrRegionsPerDimMax 14
    nrRegionsPerDimMax 10
    nrRegionsStepSize 4
    # Lazebnik Spatial Pyramid (cvpr'06)
    # 1x1 = level 0 = no spatial pyramid
    # set number of 'bins' per dimension
    nrLazebnikRegionsPerDimMinX 1
    nrLazebnikRegionsPerDimMaxX 1
    nrLazebnikRegionsStepSizeX 2
    nrLazebnikRegionsPerDimMinY 1
    nrLazebnikRegionsPerDimMaxY 3
    nrLazebnikRegionsStepSizeY 2
    overlapLazebnikRegions 0
    protoDataFile clusters

An Impala::Core::VideoSet::ClusterFeatures object with an Impala::Core::Feature::VisSem object is given each keyframe and uses an Impala::Core::Feature::Clusteror object to cluster the features. The result is an Impala::Core::Feature::FeatureTableSet with an Impala::Core::Feature::FeatureTable for each parameter setting.

The tables are stored in Codebooks/clusters/vissem/ .

    vidset clusterfeatures demoset.txt gabor "radius;histint;5.5;5.5;1.0" start 500 5000 --keyframes --keyframeSrc --ini ../script/vissemClusterSP.ini --report 25
    vidset clusterfeatures demoset.txt gabor "radius;histint;6.0;6.0;1.0" start 500 5000 --keyframes --keyframeSrc --ini ../script/vissemClusterSP.ini --report 25
    vidset clusterfeatures demoset.txt gabor "radius;histint;6.5;6.5;1.0" start 500 5000 --keyframes --keyframeSrc --ini ../script/vissemClusterSP.ini --report 25
    vidset clusterfeatures demoset.txt gabor "radius;histint;7.0;7.0;1.0" start 500 5000 --keyframes --keyframeSrc --ini ../script/vissemClusterSP.ini --report 25
    vidset clusterfeatures demoset.txt gabor "radius;histint;7.5;7.5;1.0" start 500 5000 --keyframes --keyframeSrc --ini ../script/vissemClusterSP.ini --report 25
    vidset clusterfeatures demoset.txt gabor "radius;histint;8.0;8.0;1.0" start 500 5000 --keyframes --keyframeSrc --ini ../script/vissemClusterSP.ini --report 25
    vidset clusterfeatures demoset.txt gabor "radius;histint;8.5;8.5;1.0" start 500 5000 --keyframes --keyframeSrc --ini ../script/vissemClusterSP.ini --report 25
    vidset clusterfeatures demoset.txt gabor "radius;histint;9.0;9.0;1.0" start 500 5000 --keyframes --keyframeSrc --ini ../script/vissemClusterSP.ini --report 25

An Impala::Core::VideoSet::ClusterFeatures object with an Impala::Core::Feature::VisSem object is given each keyframe and uses an Impala::Core::Feature::Clusteror object to cluster the features. The result is an Impala::Core::Feature::FeatureTableSet with an Impala::Core::Feature::FeatureTable for each parameter setting.

The tables are stored in Codebooks/clusters/vissemgabor/ .
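Because the gabor clustering commands differ only in the radius value, they can be generated with a loop. The sketch below prints the commands rather than running them, so it works without vidset installed; the function name is our own.

```shell
#!/bin/sh
# Print (rather than run) the eight gabor clustering commands listed above.
# The embedded double quotes protect the semicolons if the output is later
# reviewed and piped to sh on a machine where vidset is available.
gabor_cluster_commands() {
    for radius in 5.5 6.0 6.5 7.0 7.5 8.0 8.5 9.0; do
        echo vidset clusterfeatures demoset.txt gabor \
            "\"radius;histint;${radius};${radius};1.0\"" \
            start 500 5000 --keyframes --keyframeSrc \
            --ini ../script/vissemClusterSP.ini --report 25
    done
}

gabor_cluster_commands
```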

IDo commands:

Sample usage:

src/script/ido/imsearchtest/featureWG.ido:

    set -o errexit
    export SET=imsearchtest.txt
    export PROTOSET=reference_model.txt
    export NCPU=3
    cleanFeature
    featureWG
    checkFeatureWG

src/script/ido/imsearchtest/featureSift.ido:

    set -o errexit
    export SET=imsearchtest.txt
    export PROTOSET=reference_model.txt
    export NCPU=4
    cleanFeature
    featureHarrisSift
    featureDense2Sift
    checkFeatureSift

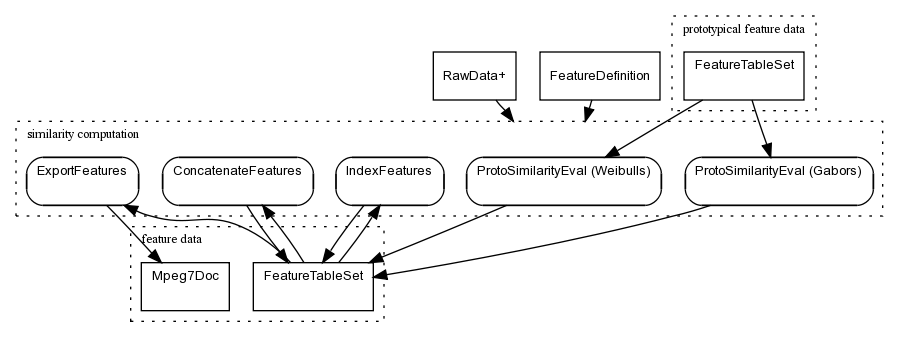

Activity diagram:

Class diagram:

todo

vidset viseval demoset.txt --keyframes --keyframeSrc --ini ../script/vissem.ini --report 10

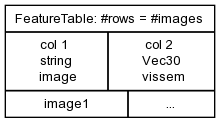

An Impala::Core::VideoSet::ProtoSimilarityEval object with an Impala::Core::Feature::VisSem object is given each keyframe and computes its similarity to all proto concepts. First, the image is divided into a number of rectangles of varying size (size is defined by regions/dimension). For each of the rectangles features are computed and compared to the features of all annotations using a Weibull similarity function. The average similarity for all annotations of one concept is stored in an Impala::Core::Feature::FeatureTable as an intermediate result. The final result is computed by taking both average and maximum of the similarities for each combination of a sigma and a rectangle size. The result is an Impala::Core::Feature::FeatureTableSet with four Impala::Core::Feature::FeatureTable s: vissem_proto_annotation_scale_1_rpd_2, vissem_proto_annotation_scale_1_rpd_6, vissem_proto_annotation_scale_3_rpd_2, and vissem_proto_annotation_scale_3_rpd_6 (rpd stands for region/dimension). The feature vector of one of these tables typically contains 30 values : average and maximum similarity to 15 proto concepts.

The tables are stored per video in FeatureData/Keyframes/vissem/vid.mpg/ .
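To make the bookkeeping concrete, the sketch below enumerates the four table names as the combinations of two scales and two regions-per-dimension settings, and computes the feature vector length for 15 proto concepts. The function and variable names are our own; only the table names and counts come from the description above.

```shell
#!/bin/sh
# Enumerate the per-video table names for every (scale, rpd) combination.
# Scale labels 1 and 3 correspond to sigma=1.0 and sigma=3.0; rpd values
# 2 and 6 are the regions-per-dimension settings named in the text.
vissem_table_names() {
    for scale in 1 3; do
        for rpd in 2 6; do
            echo "vissem_proto_annotation_scale_${scale}_rpd_${rpd}"
        done
    done
}

vissem_table_names

# Each feature vector holds an average and a maximum per proto concept.
proto_concepts=15
echo "values per feature vector: $((proto_concepts * 2))"
```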

vidset concatfeatures demoset.txt vissem_proto_annotation_nrScales_2_nrRects_130 \

vissem_proto_annotation_scale_1_rpd_2 vissem_proto_annotation_scale_1_rpd_6 \

vissem_proto_annotation_scale_3_rpd_2 vissem_proto_annotation_scale_3_rpd_6 \

--keyframes --virtualWalk

An Impala::Core::VideoSet::ConcatFeatures object is given each video id (as a trick, the --keyframes and --virtualWalk options tell the Walker not to look for any actual data) to locate tables from an Impala::Core::Feature::FeatureTableSet and concatenate them into a single Impala::Core::Feature::FeatureTable. The result is stored in FeatureData/Keyframes/vissem/vid.mpg/ and may be inspected using table dumpfeaturetable vissem_proto_annotation_nrScales_2_nrRects_130.tab .

vidset visgaboreval demoset.txt --keyframes --keyframeSrc --ini ../script/vissem.ini --report 10

An Impala::Core::VideoSet::ProtoSimilarityEval object with an Impala::Core::Feature::VisSemGabor object produces an Impala::Core::Feature::FeatureTableSet. The tables are stored per video in FeatureData/Keyframes/vissemgabor/vid.mpg/ .

vidset concatfeatures demoset.txt vissemgabor_proto_annotation_nrScales_2_nrRects_130 \

vissemgabor_proto_annotation_scale_2.828_rpd_2 vissemgabor_proto_annotation_scale_2.828_rpd_6 \

vissemgabor_proto_annotation_scale_1.414_rpd_2 vissemgabor_proto_annotation_scale_1.414_rpd_6 \

--keyframes --virtualWalk

An Impala::Core::VideoSet::ConcatFeatures object is given each video id (as a trick, the --keyframes and --virtualWalk options tell the Walker not to look for any actual data) to locate tables from an Impala::Core::Feature::FeatureTableSet and concatenate them into a single Impala::Core::Feature::FeatureTable. The result is stored in FeatureData/Keyframes/vissemgabor/vid.mpg/ and may be inspected using table dumpfeaturetable vissemgabor_proto_annotation_nrScales_2_nrRects_130.tab .

vidset concatfeatures demoset.txt fusionvissemgabor_proto_annotation_nrScales_2_nrRects_130 \

vissem_proto_annotation_nrScales_2_nrRects_130 vissemgabor_proto_annotation_nrScales_2_nrRects_130 \

--keyframes --virtualWalk

An Impala::Core::VideoSet::ConcatFeatures object is given each video id (as a trick, the --keyframes and --virtualWalk options tell the Walker not to look for any actual data) to locate tables from an Impala::Core::Feature::FeatureTableSet and concatenate them into a single Impala::Core::Feature::FeatureTable. The result is stored in FeatureData/Keyframes/fusionvissemgabor/vid.mpg/ and may be inspected using table dumpfeaturetable fusionvissemgabor_proto_annotation_nrScales_2_nrRects_130.tab .

    vidset exportfeatures demoset.txt 25 vissem_proto_annotation_nrScales_2_nrRects_130 --keyframes --virtualWalk --segmentation
    vidset exportfeatures demoset.txt 25 vissemgabor_proto_annotation_nrScales_2_nrRects_130 --keyframes --virtualWalk --segmentation

An Impala::Core::VideoSet::ExportFeatures object transfers data from Impala::Core::Feature::FeatureTable s with the given Impala::Core::Feature::FeatureDefinition into an Impala::Core::VideoSet::Mpeg7Doc. The result is stored in MetaData/features/*.mpg/vissem.xml and MetaData/features/*.mpg/vissemgabor.xml.

    vidset indexfeatures demoset.txt vissem_proto_annotation_nrScales_2_nrRects_130 --keyframes --virtualWalk
    vidset indexfeatures demoset.txt vissemgabor_proto_annotation_nrScales_2_nrRects_130 --keyframes --virtualWalk
    vidset indexfeatures demoset.txt fusionvissemgabor_proto_annotation_nrScales_2_nrRects_130 --keyframes --virtualWalk

An Impala::Core::VideoSet::IndexFeatures object assembles the FeatureTables with the given Impala::Core::Feature::FeatureDefinition (stored per video) into one table. The resulting tables are stored in FeatureIndex/vissem/, FeatureIndex/vissemgabor/, and FeatureIndex/fusionvissemgabor/, respectively.

IDo commands:

Sample usage:

src/script/ido/voc2007devel/modelPreBoth2SiftP112213.ido:

    set -o errexit
    export SET=voc2007devel.txt
    export CONCEPTS=conceptsVOC2007.txt
    export MODEL=chi2
    export NCPU=25
    cleanModel
    precomputeBoth2SiftP112213
    checkPrecomputeBoth2SiftP112213
    export NCPU=2
    modelPreBoth2SiftP112213
    checkModelBoth2SiftP112213

src/script/ido/voc2007devel/modelFikBoth2SiftP112213.ido:

    set -o errexit
    export SET=voc2007devel.txt
    export CONCEPTS=conceptsVOC2007.txt
    export MODEL=hik
    export NCPU=25
    cleanModel
    precomputeBoth2SiftP112213
    checkPrecomputeBoth2SiftP112213
    export NCPU=2
    export FIK=1
    export MODELBINS=100
    modelPreBoth2SiftP112213
    checkModelBoth2SiftP112213

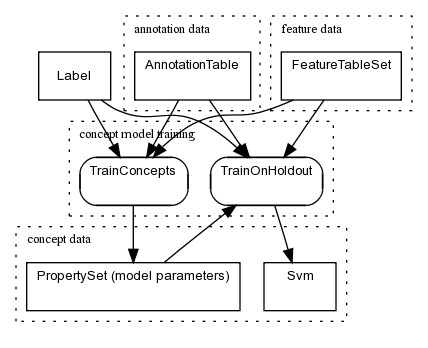

Activity diagram:

Class diagram:

Todo

    trainconcepts annoset.txt concepts.txt modelname vissem_proto_annotation_nrScales_2_nrRects_130 --ini ../script/train.ini
    trainconcepts annoset.txt concepts.txt modelname vissemgabor_proto_annotation_nrScales_2_nrRects_130 --ini ../script/train.ini
    trainconcepts annoset.txt concepts.txt modelname fusionvissemgabor_proto_annotation_nrScales_2_nrRects_130 --ini ../script/train.ini

train.ini:

    w1 [log0:3]
    w2 [log0:3]
    gamma [log-2:2]
    kernel rbf
    repetitions 1
    episode-constrained 1
    cache 900

Impala::Samples::mainTrainConcepts uses Impala::Core::Table::AnnotationTable s to assemble Impala::Core::Feature::FeatureTable s for positive and negative examples. An Impala::Core::Training::ParameterSearcher object produces an Impala::Util::PropertySet with the best model parameters. The result is stored in ConceptModels/concepts.txt/modelname/vissem/*.best.
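The exact meaning of the `[logA:B]` notation in train.ini is not spelled out here. Purely as an assumption, the sketch below reads it as the powers of ten from 10^A up to 10^B with integer exponents, and counts how many parameter combinations a search over w1, w2 and gamma would then have to try. The function name is hypothetical, and the real ParameterSearcher may interpret the ranges differently.

```shell
#!/bin/sh
# ASSUMPTION: "[logA:B]" is read as the values 10^A, 10^(A+1), ..., 10^B.
# This interpretation is not confirmed by the text above.
expand_log_range() {
    awk -v lo="$1" -v hi="$2" 'BEGIN { for (e = lo; e <= hi; e++) print 10 ^ e }'
}

# Count the candidate values per parameter under this reading.
w1_count=$(expand_log_range 0 3 | wc -l)
w2_count=$(expand_log_range 0 3 | wc -l)
gamma_count=$(expand_log_range -2 2 | wc -l)

echo "grid points under this reading: $((w1_count * w2_count * gamma_count))"
```

Under this reading the search grid grows multiplicatively with each parameter, which is one reason to keep the ranges in train.ini narrow.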

    trainonholdout annoset.txt concepts.txt modelname vissem_proto_annotation_nrScales_2_nrRects_130 --ini ../script/train.ini
    trainonholdout annoset.txt concepts.txt modelname vissemgabor_proto_annotation_nrScales_2_nrRects_130 --ini ../script/train.ini
    trainonholdout annoset.txt concepts.txt modelname fusionvissemgabor_proto_annotation_nrScales_2_nrRects_130 --ini ../script/train.ini

Impala::Samples::mainTrainConcepts uses Impala::Core::Table::AnnotationTable s to assemble Impala::Core::Feature::FeatureTable s for positive and negative examples. An Impala::Core::Training::Svm object uses the best model parameters to compute an SVM model and stores it in ConceptModels/concepts.txt/modelname/vissem/*.model. The performance measure is stored in ConceptModels/concepts.txt/modelname/vissem/*.ScoreOnSelf.

IDo commands:

Sample usage:

src/script/ido/voc2007test/classifyPreBoth2SiftP112213.ido:

    set -o errexit
    export SET=voc2007test.txt
    export CONCEPTS=conceptsVOC2007.txt
    export MODEL=chi2
    export ANNOSET=voc2007devel.txt
    export ANNOWALKTYPE=Image
    export NCPU=20
    export PPN=5
    cleanClassify
    export COMPUTEKERNELDATA=1
    classifyPreBoth2SiftP112213
    checkClassifyPreBoth2SiftP112213
    mapPreBoth2SiftP112213

src/script/ido/voc2007test/classifyFikBoth2SiftP112213.ido:

    set -o errexit
    export SET=voc2007test.txt
    export CONCEPTS=conceptsVOC2007.txt
    export MODEL=hik-approx-100
    export ANNOSET=voc2007devel.txt
    export ANNOWALKTYPE=Image
    export NCPU=20
    export PPN=5
    cleanClassify
    export FIK=1
    classifyPreBoth2SiftP112213
    checkClassifyPreBoth2SiftP112213
    mapPreBoth2SiftP112213

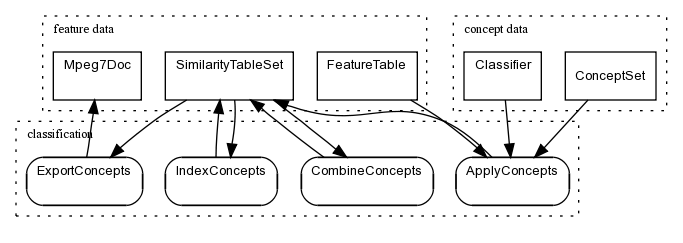

Activity diagram:

Class diagram:

todo

vidset applyconcepts demoset.txt annoset.txt concepts.txt modelname vissem_proto_annotation_nrScales_2_nrRects_130 \

--keyframes --virtualWalk --data ".;../annoset"

vidset applyconcepts demoset.txt annoset.txt concepts.txt modelname vissemgabor_proto_annotation_nrScales_2_nrRects_130 \

--keyframes --virtualWalk --data ".;../annoset"

vidset applyconcepts demoset.txt annoset.txt concepts.txt modelname fusionvissemgabor_proto_annotation_nrScales_2_nrRects_130 \

--keyframes --virtualWalk --data ".;../annoset"

An Impala::Core::VideoSet::ApplyConcepts object creates an Impala::Core::Feature::ConceptSet with the given concepts. For each concept an Impala::Core::Training::Classifier is instantiated and asked to score all features in the Impala::Core::Feature::FeatureTable found for each video. The result is an Impala::Core::Table::SimilarityTableSet with concept similarities as well as a ranking per video. It is stored in SimilarityData/Keyframes/concepts.txt/modelname/vissem/vid.mpg/.

vidset combineconcepts demoset.txt concepts.txt modelname combined avg vissem_proto_annotation_nrScales_2_nrRects_130 \

vissemgabor_proto_annotation_nrScales_2_nrRects_130 fusionvissemgabor_proto_annotation_nrScales_2_nrRects_130 \

--keyframes --virtualWalk

An Impala::Core::VideoSet::CombineConcepts object loads an Impala::Core::Table::SimilarityTableSet for each feature definition. The scores in the SimTables are averaged and ranked. The result is again a SimilarityTableSet and is stored in SimilarityData/Keyframes/concepts.txt/modelname/combined/vid.mpg/.
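The averaging and ranking step can be illustrated on toy data. The keyframe ids and scores below are made up, and the plain-text layout is an assumption for the example only; the real similarity tables are Impala table files, not text.

```shell
#!/bin/sh
# Toy scores for three keyframes from three feature definitions.
# Columns: keyframe id, then one score per feature definition.
# The ids and numbers are made up for illustration.
scores="kf1 0.9 0.2 0.1
kf2 0.4 0.5 0.6
kf3 0.8 0.9 0.7"

# Average the scores per keyframe, then rank from high to low.
combine_avg() {
    awk '{ sum = 0
           for (i = 2; i <= NF; i++) sum += $i
           printf "%s %.3f\n", $1, sum / (NF - 1) }' |
        LC_ALL=C sort -k2,2nr
}

echo "$scores" | combine_avg
```

A keyframe that scores consistently well across feature definitions (kf3 here) ends up above one that scores very high on a single definition only (kf1).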

vidset exportconcepts demoset.txt 25 concepts.txt modelname combined --keyframes --virtualWalk --segmentation

An Impala::Core::VideoSet::ExportConcepts object transfers data from Impala::Core::Table::SimilarityTableSet with the given Impala::Core::Feature::FeatureDefinition into an Impala::Core::VideoSet::Mpeg7Doc. The result is stored in MetaData/similarities/concepts.txt/vid.mpg/concept.xml.

vidset indexconcepts demoset.txt concepts.txt modelname combined --keyframes --virtualWalk

An Impala::Core::VideoSet::IndexConcepts object assembles Impala::Core::Table::SimilarityTableSet s related to the given Impala::Core::Feature::FeatureDefinition (stored per video) into one table. The resulting set of tables is stored in SimilarityIndex/concepts.txt/modelname/combined/concept_*.tab.

IDo commands:

Sample usage:

src/script/ido/voc2007test/classifyPreBoth2SiftP112213.ido:

    set -o errexit
    export SET=voc2007test.txt
    export CONCEPTS=conceptsVOC2007.txt
    export MODEL=chi2
    export ANNOSET=voc2007devel.txt
    export ANNOWALKTYPE=Image
    export NCPU=20
    export PPN=5
    cleanClassify
    export COMPUTEKERNELDATA=1
    classifyPreBoth2SiftP112213
    checkClassifyPreBoth2SiftP112213
    mapPreBoth2SiftP112213

showvidset

showvidset.ini:

    data .
    videoSet demoset.txt
    imageSetThumbnails thumbnails.txt
    imageSetKeyframes keyframes.txt
    imageSetStills stills.txt
    conceptSet concepts.txt
    #conceptSetSubDir vissem_proto_annotation_nrScales_2_nrRects_130
    #conceptSetSubDir vissemgabor_proto_annotation_nrScales_2_nrRects_130
    #conceptSetSubDir fusionvissemgabor_proto_annotation_nrScales_2_nrRects_130
    featureSet vissem_proto_annotation_nrScales_2_nrRects_130;vissemgabor_proto_annotation_nrScales_2_nrRects_130
    conceptAnnotations concepts.txt
    imageStills 1

trecsearch

trecsearch.ini:

    videoSet demoset.txt
    imageSetThumbnails thumbnails.txt
    imageSetKeyframes keyframes.txt
    # if commented out, no stills:
    imageSetStills stills.txt
    #xTextSearchServer http://licor.science.uva.nl:8000/axis/services/TextSearchWS
    #xTextSuggestServer http://licor.science.uva.nl:8000/axis/services/DetectorSuggestWS
    #xRegionQueryServer http://146.50.0.57:8080/axis/QueryGateway.jws
    maxImagesOnRow 2
    initialBrowser 2
    xTextSearchSet test
    # xTextSearchSet devel
    xTextSearchYear 2006
    year 2006
    trecTopicSet topics.2006.xml
    threadFile tv2006_threads
    #judgeFile search.qrels.tv05
    #searchTopicImages mixed.txt
    #searchTopicImages trec2006topic_keyframes.txt
    #searchTopicThumbnails trec2006topic_thumbnails.txt
    conceptSet tv2006_interactive
    conceptCat tv2006_categories
    conceptStripToDot 0
    conceptStripExtension 1
    conceptQuality tv2006_quality
    conceptMapping tv2006_mapping

1.5.1
